Windows Azure is an emerging technology that will take up a growing share of our lives as software developers and IT pros. With earlier releases of Windows Azure Tools for Visual Studio, deploying an application to the Azure environment was an almost painful process. The standard Publish process created the Azure package and then opened the Windows Azure portal so that we could upload the package manually. This option still exists in Visual Studio 2010, and it is the only option in Visual Studio 2008. However, there is a new, slick option that lets us publish and deploy our Azure package right from within Visual Studio. This post is about that particular option.

Before we begin, let’s make sure we have installed the most recent version of Windows Azure Tools for Visual Studio.

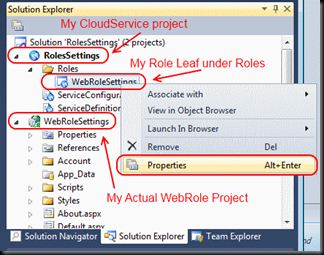

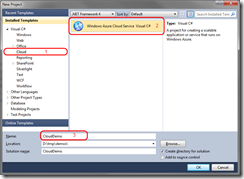

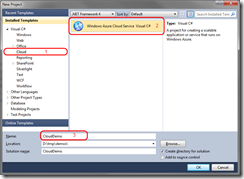

For the purpose of the demo I will create a very simple CloudDemo application. Just select “File” –> “New” –> “Project”, and then choose “Cloud” from “Installed Templates”. The only available template is “Windows Azure Cloud Service C#”:

A new window will pop up: a wizard for the initial configuration of Roles for our service. Just add one ASP.NET Web Role:

Assuming this is the cloud project we want to deploy, let’s first go through the Windows Azure Web Role deployment checklist before we continue (a common mistake is forgetting to configure the DiagnosticsConnectionString setting of our WebRole).
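To make that checklist item concrete, here is a minimal sketch of how the check could be automated. It assumes the setting lives in a ServiceConfiguration.cscfg file and that an unconfigured role still carries the development storage value; the function name `roles_using_dev_storage` and the sample configuration are made up for illustration, not something the tools provide.

```python
import xml.etree.ElementTree as ET

# XML namespace of ServiceConfiguration.cscfg files.
CSCFG_NS = "http://schemas.microsoft.com/ServiceHosting/2008/10/ServiceConfiguration"
# Assumption: this is the value an unconfigured role still carries.
DEV_STORAGE = "UseDevelopmentStorage=true"


def roles_using_dev_storage(cscfg_xml, setting_name="DiagnosticsConnectionString"):
    """Return names of roles whose setting is missing or still points at development storage."""
    root = ET.fromstring(cscfg_xml)
    flagged = []
    for role in root.findall(f"{{{CSCFG_NS}}}Role"):
        value = None
        for setting in role.iter(f"{{{CSCFG_NS}}}Setting"):
            if setting.get("name") == setting_name:
                value = setting.get("value", "")
        if value is None or value.strip().lower() == DEV_STORAGE.lower():
            flagged.append(role.get("name"))
    return flagged


# Hypothetical configuration for the CloudDemo project, for illustration only.
SAMPLE = """<ServiceConfiguration serviceName="CloudDemo"
    xmlns="http://schemas.microsoft.com/ServiceHosting/2008/10/ServiceConfiguration">
  <Role name="WebRole1">
    <Instances count="1" />
    <ConfigurationSettings>
      <Setting name="DiagnosticsConnectionString" value="UseDevelopmentStorage=true" />
    </ConfigurationSettings>
  </Role>
</ServiceConfiguration>"""

print(roles_using_dev_storage(SAMPLE))  # ['WebRole1']
```

An empty list would mean every role has the setting pointed at something other than development storage. It does not prove that the connection string itself is valid.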

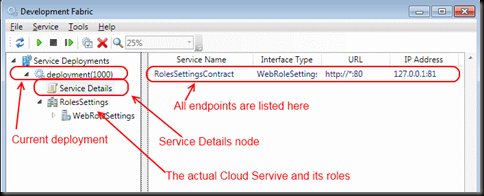

Now it is time to publish our Windows Azure service with that single ASP.NET WebRole. There is some initial configuration that must be performed once. After that, every time we publish a new version, it will be just a single click away!

Right click on the Windows Azure Service project from our solution and choose “Publish” from the context menu:

This will pop up a new window that will help us publish our project:

There are two options to choose from: Create Service Package Only and Deploy your Cloud Service to Windows Azure. We are interested in the second one, Deploy your Cloud Service to Windows Azure. Now we have to configure our credentials for deploying to Windows Azure. The deployment process uses the Windows Azure Service Management API, which relies on client certificate authentication, and there is a neat option for generating client certificates for use with Windows Azure. In the “Publish Cloud Service” window, which is still open, open the drop down right below “Credentials” and choose “Add …”:

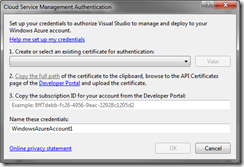

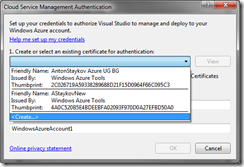

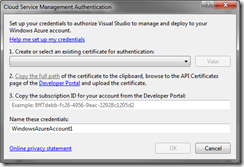

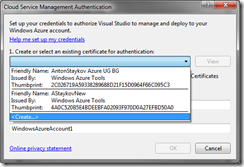

Another window “Cloud Service Management Authentication” will open:

Within this window we have to create a certificate for authentication. Open the drop down and choose “<Create…>”:

This option will automatically create a certificate for us (we only have to name it). Once the certificate is created, we select it from the drop down menu and proceed to step (2) of the wizard, which is uploading our certificate to the Windows Azure Portal. The wizard makes this easy by copying the certificate to a temp folder. Clicking the “Copy the full path” link copies the full path to our clipboard:

Now we have to log in to the Windows Azure portal (http://windows.azure.com/) and upload the certificate to the appropriate project (don’t close any Visual Studio 2010 window, as we will be coming back to it). First we must select the project to which we will assign the certificate:

Then we click on the “Account” tab and navigate to the “Manage my API certificates” link:

Here, we simply click browse and just paste the copied path to the certificate, then click Upload:

Please note that there is a small chance of encountering an error of type “The certificate is not yet valid” during the upload process. If that happens, you have to wait a minute or two and try the upload again. The reason for this error is that your computer’s clock might not be as accurate and well synchronized as the clocks of the Windows Azure servers. If your clock is a minute or more ahead of the actual time, the generated certificate is valid from a point in time that has not yet occurred on the Windows Azure servers. Once the upload succeeds, you will see the certificate in the list of installed certificates:
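The arithmetic behind that error is simple enough to sketch. The snippet below is only an illustration: the timestamps are invented, the function name `seconds_until_valid` is hypothetical, and it assumes the certificate’s validity period starts at the local time when it was generated.

```python
from datetime import datetime, timedelta, timezone


def seconds_until_valid(not_before, server_now):
    """Return how many seconds remain until the certificate is valid on the server (0 if already valid)."""
    remaining = (not_before - server_now).total_seconds()
    return max(0.0, remaining)


# Hypothetical scenario: the local clock runs 90 seconds ahead of the server.
server_now = datetime(2010, 7, 1, 12, 0, 0, tzinfo=timezone.utc)
local_now = server_now + timedelta(seconds=90)

# Assumption: the validity period starts at the local generation time.
not_before = local_now

wait = seconds_until_valid(not_before, server_now)
print(f"The server would reject the certificate for another {wait:.0f} seconds")
```

With a clock that is 90 seconds fast, waiting a minute or two before retrying is enough, which matches the advice above.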

After you upload the certificate successfully to the Windows Azure server, you have to go back to the “Account” tab and copy the Subscription ID to your clipboard:

Going back to Visual Studio’s “Cloud Service Management Authentication” window, you have to paste your subscription ID into the corresponding field:
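Since the subscription ID is a GUID, a copy and paste slip (a stray space or a missing character) is easy to catch before it reaches the dialog. Here is a small sketch using Python’s standard `uuid` module; the function name `normalize_subscription_id` and the sample value are made up for illustration.

```python
import uuid


def normalize_subscription_id(text):
    """Return the canonical lowercase GUID form, or raise ValueError if the text is not a GUID."""
    cleaned = text.strip()
    return str(uuid.UUID(cleaned))


# Hypothetical pasted value with stray whitespace and uppercase letters.
pasted = "  12345678-90AB-CDEF-1234-567890ABCDEF \n"
print(normalize_subscription_id(pasted))  # 12345678-90ab-cdef-1234-567890abcdef

try:
    normalize_subscription_id("12345678-90AB-CDEF-1234")  # truncated while copying
except ValueError:
    print("Not a valid subscription ID")
```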

As the last step of configuring our account, we have to give it a meaningful name, so that when we see it in the drop down list of installed Credentials, we will know which service the account is for. For this project I chose the name “WindowsAzureCloudDemoCert”. When we are ready and hit the OK button, we go back to the “Publish Cloud Service” window and select “WindowsAzureCloudDemoCert” from the Credentials drop down. An authentication attempt will be made against the Azure service to validate the credentials. If everything is fine, we will see details for our account, such as the account name, the slots for deployment (production and staging), and the storage accounts associated with that service account:
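Which calls Visual Studio makes for this check is not something this post covers, so treat the following only as a rough sketch of what a request to the management API looks like. It assumes the “list hosted services” REST operation on `management.core.windows.net` and the `2009-10-01` value for the `x-ms-version` header. The function name and the all-zero subscription ID are placeholders. The request is only built, not sent, because sending it would also require presenting the client certificate and its private key.

```python
from urllib.request import Request

# Assumptions: host name and API version string of the management API.
MANAGEMENT_HOST = "management.core.windows.net"
API_VERSION = "2009-10-01"


def build_list_hosted_services_request(subscription_id):
    """Build (but do not send) a GET request that lists the hosted services of a subscription."""
    url = f"https://{MANAGEMENT_HOST}/{subscription_id}/services/hostedservices"
    return Request(url, headers={"x-ms-version": API_VERSION}, method="GET")


# Placeholder subscription ID, not a real account.
request = build_list_hosted_services_request("00000000-0000-0000-0000-000000000000")
print(request.get_method(), request.full_url)
```

The point of the sketch is that the subscription ID identifies the account in the URL, while the uploaded certificate is what proves we are allowed to manage it.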

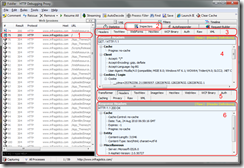

When you hit OK, the publish process will start. A successful publish takes about 10 minutes. A friendly “Windows Azure Activity Log” window within Visual Studio shows the process steps and history:

Well, as I said, there is an initial process of configuring credentials. Once everything is set up correctly, publishing is just a matter of choosing the credentials and the Hosted Service slot for deployment (production or staging).

Have a great time developing for Windows Azure!